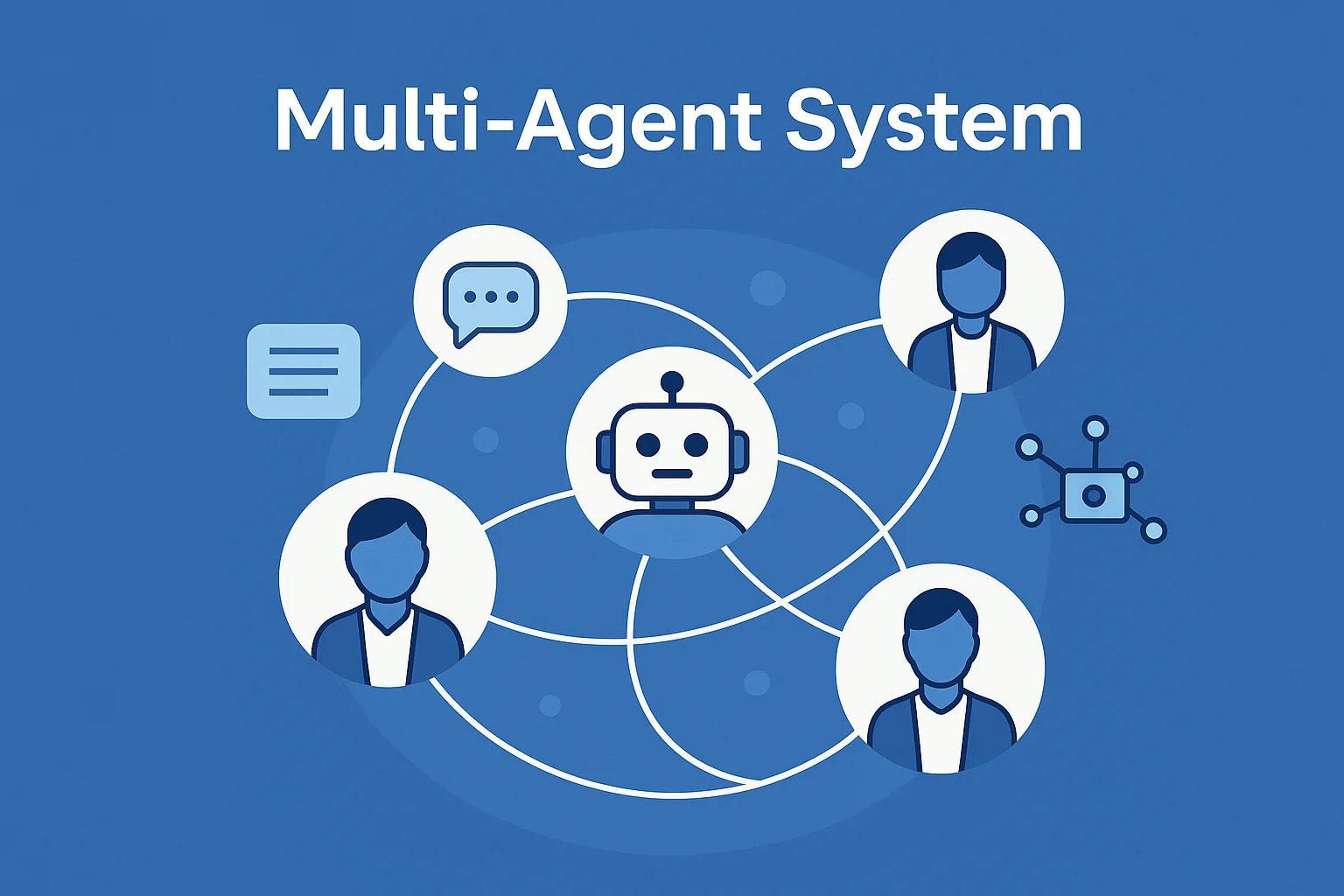

Artificial intelligence is shifting from single smart assistants to teams of agents that can collaborate, specialize, and solve problems together.

Instead of one giant model doing everything, we’re starting to see:

- A Research Agent that gathers information

- A Planner Agent that decides on steps

- A Developer Agent that writes code

- A Reviewer Agent that checks the result

All of these agents talk to each other, share context, and work toward a common goal.

This is exactly what Multi-Agent Systems (MAS) are about.

In this blog, we’ll break down:

- What multi-agent systems are

- The core building blocks (agents, environment, communication)

- Different types of multi-agent interactions (cooperative, competitive, mixed)

- Why multi-agent designs are becoming so important in AI

- Real-world examples and use cases

- How LLM-based agents fit into the MAS world

- Key challenges and design tips

1. What Is a Multi-Agent System?

A multi-agent system (MAS) is a system made up of multiple interacting agents, each with:

- Its own goals or role

- Its own knowledge or perspective

- Its own ability to act in an environment

An “agent” can be:

- A software program (AI assistant, script, bot)

- A robot

- Even a human participant in a larger system

In a MAS:

- Agents sense their environment

- They decide what to do based on their goals and information

- They act and communicate with each other

Instead of one centralized brain controlling everything, intelligence is distributed across many agents.

Simple analogy:

Think of a sports team. No single player controls the whole game. Each player has a position, local information, and their own decisions—but together they form a strategy and win (or lose) as a group. That’s a multi-agent system in action.

2. Core Building Blocks of Multi-Agent Systems

To understand MAS, it helps to break it into a few basic components.

Agents

An agent is an autonomous entity that can:

- Perceive (observe some part of the world)

- Decide (choose an action)

- Act (do something that changes the environment or sends a message)

Agents might be:

- “Dumb” but fast (e.g., a simple rule-based bot)

- “Smart” and heavy (e.g., a large language model)
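To make the perceive → decide → act cycle concrete, here is a minimal sketch of a "dumb but fast" rule-based agent. It assumes a toy world where the environment is just a dictionary holding a room temperature. The class and method names (`ThermostatAgent`, `perceive`, `decide`, `act`) are hypothetical and chosen only to mirror the three capabilities above.

```python
from dataclasses import dataclass


@dataclass
class ThermostatAgent:
    """A simple rule-based agent that keeps a room near a target temperature."""
    target: float = 21.0

    def perceive(self, env: dict) -> float:
        # Observe only the part of the world this agent cares about.
        return env["temperature"]

    def decide(self, temperature: float) -> str:
        # Choose an action with a fixed rule; a "smart" agent could plan here.
        if temperature < self.target - 0.5:
            return "heat"
        if temperature > self.target + 0.5:
            return "cool"
        return "idle"

    def act(self, env: dict, action: str) -> None:
        # Acting changes the environment that other agents may also observe.
        if action == "heat":
            env["temperature"] += 1.0
        elif action == "cool":
            env["temperature"] -= 1.0

    def step(self, env: dict) -> str:
        action = self.decide(self.perceive(env))
        self.act(env, action)
        return action


env = {"temperature": 18.0}
agent = ThermostatAgent()
actions = [agent.step(env) for _ in range(4)]
print(actions, env["temperature"])
```

Swapping the `decide` rule for a call to a language model would turn this into a "smart and heavy" agent without changing the surrounding loop.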

Environment

The environment is the world in which agents operate. It can be:

- A physical space (for robots, drones, vehicles)

- A digital environment (APIs, databases, game worlds)

- A hybrid (IoT devices, smart factories, etc.)

Agents rarely see the entire environment. They might have:

- Partial information

- Delays or noise

- Different views depending on their role
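A small sketch of partial, noisy observation, assuming a one-dimensional grid world where each agent sees only the cells within a fixed radius of its position, and each reading can carry random sensor noise. `GridEnvironment` and `observe` are illustrative names, not part of any framework.

```python
import random


class GridEnvironment:
    """A 1-D world whose cells hold values; agents see only a local window."""

    def __init__(self, cells: list[float], noise: float = 0.0, seed: int = 0):
        self.cells = cells
        self.noise = noise
        self.rng = random.Random(seed)

    def observe(self, position: int, radius: int) -> dict[int, float]:
        # Return only the cells within `radius` of the agent (partial view),
        # each perturbed by Gaussian noise to mimic imperfect sensors.
        lo = max(0, position - radius)
        hi = min(len(self.cells), position + radius + 1)
        return {
            i: self.cells[i] + self.rng.gauss(0.0, self.noise)
            for i in range(lo, hi)
        }


env = GridEnvironment(cells=[float(i) for i in range(8)], noise=0.0)
scout_view = env.observe(position=1, radius=1)    # narrow view for one role
planner_view = env.observe(position=5, radius=2)  # wider view for another role
print(scout_view, planner_view)
```

Setting `noise` above zero makes two agents looking at the same cell disagree slightly, which is often the situation real agents have to reason about.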

Interaction & Communication

Agents interact in two main ways:

1. Indirectly, via the environment

   - One agent changes the environment; others notice the change.

   - Example: multiple trading bots interacting through a market’s price movements.

2. Directly, via communication

   - Agents send messages to each other (text, signals, APIs).

   - Example: a planning agent sends a “task” to a worker agent.

Technical Insight: A multi-agent system is often modeled as a set of agents plus a shared state (environment). Each agent runs a loop:

observe → decide → act → communicate → observe again.

Because all agents are doing this in parallel, complex global behavior can “emerge” from many local decisions.
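The loop can be sketched in a few lines of Python. This is a single-threaded simulation under simplifying assumptions: agents take turns within each round instead of truly running in parallel, the shared state is a plain dictionary (indirect interaction), and each agent has an inbox for messages that are delivered at the end of every round (direct interaction). All class and function names are hypothetical.

```python
from collections import deque


class Agent:
    def __init__(self, name: str):
        self.name = name
        self.inbox: deque = deque()

    def step(self, state: dict, outbox: list[tuple[str, dict]]) -> None:
        raise NotImplementedError


class Planner(Agent):
    def __init__(self, name: str, tasks: list[str]):
        super().__init__(name)
        self.pending = list(tasks)

    def step(self, state, outbox):
        # Observe the shared state: is a task still in progress?
        busy = state.get("in_progress")
        # Decide and communicate: hand out the next task only when the worker is free.
        if not busy and self.pending:
            task = self.pending.pop(0)
            state["in_progress"] = task
            outbox.append(("worker", {"type": "task", "task": task}))


class Worker(Agent):
    def step(self, state, outbox):
        # Observe direct messages, then act on the shared environment.
        while self.inbox:
            msg = self.inbox.popleft()
            if msg["type"] == "task":
                state.setdefault("done", []).append(msg["task"].upper())
                state["in_progress"] = None  # the planner notices this change


def run(agents: dict[str, Agent], rounds: int) -> dict:
    state: dict = {}
    for _ in range(rounds):
        outbox: list[tuple[str, dict]] = []
        for agent in agents.values():
            agent.step(state, outbox)
        # Deliver messages at the end of each round.
        for recipient, msg in outbox:
            agents[recipient].inbox.append(msg)
    return state


agents = {"planner": Planner("planner", ["draft", "review"]), "worker": Worker("worker")}
state = run(agents, rounds=5)
print(state["done"])
```

Neither agent sees the whole picture: the planner never reads the worker's inbox, and the worker never looks at the planner's task list. The overall "finish all tasks in order" behavior comes from their local rules plus the shared state.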

To learn more about multi-agent systems, visit BotCampusAi-Workshop.

3. Types of Multi-Agent Interactions

Not all agents are friends. MAS can involve cooperation, competition, or both.

Cooperative Systems

Agents share a common goal and work together.

- Example: drones coordinating to scan a disaster area and find survivors.

- Example: a team of AI assistants handling different parts of a workflow (research, summarization, QA).

Cooperation requires:

- Shared or aligned objectives

- Ways to coordinate (plans, protocols, roles)

- Sometimes a leader or “manager” agent
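As a rough illustration of role-based coordination, here is a sketch of a hypothetical manager agent that splits a disaster area, modeled as a grid of cells, among drone agents so that every cell is scanned exactly once. The round-robin split and the survivor positions are assumptions chosen for simplicity; a real planner would weigh distance, battery, and terrain.

```python
from itertools import product

Cell = tuple[int, int]


def assign_cells(width: int, height: int, drones: list[str]) -> dict[str, list[Cell]]:
    """Manager agent: divide grid cells among drones round-robin."""
    plan: dict[str, list[Cell]] = {d: [] for d in drones}
    for i, cell in enumerate(product(range(width), range(height))):
        plan[drones[i % len(drones)]].append(cell)
    return plan


def scan(cells: list[Cell], survivors: set[Cell]) -> list[Cell]:
    """Worker agent: report survivors found in its assigned cells."""
    return [c for c in cells if c in survivors]


survivors = {(0, 2), (3, 1)}
plan = assign_cells(4, 3, ["drone-1", "drone-2", "drone-3"])
found = [c for cells in plan.values() for c in scan(cells, survivors)]
print(sorted(found))
```

Because the manager hands out disjoint sets of cells, the drones never duplicate work and together cover the whole area.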

Competitive Systems

Agents have conflicting goals.

- Example: trading bots in a financial market

- Example: opponent AIs in a game (e.g., multi-agent reinforcement learning in StarCraft, Dota, etc.)

Here, agents:

- Try to maximize their own payoff

- Sometimes model or predict other agents’ strategies

Mixed (Coopetition) Systems

Real life is often a mix:

- Agents cooperate within a team but compete with other teams.

- Or they cooperate for some tasks and compete for others (e.g., resource allocation).

Technical Insight: Game theory is a classic mathematical tool for modeling competitive and mixed multi-agent systems: payoff matrices, Nash equilibria, auctions, bargaining, etc. In modern AI, these ideas appear in multi-agent reinforcement learning and economic simulations.
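To show what a payoff matrix and a Nash equilibrium look like in practice, here is a small sketch that brute-forces the pure-strategy Nash equilibria of a two-player game: action pairs where neither player can do better by changing only their own action. The payoffs are the textbook Prisoner's Dilemma (higher is better); the function name is illustrative.

```python
from itertools import product

Payoffs = dict[tuple[str, str], tuple[float, float]]


def pure_nash_equilibria(payoffs: Payoffs, actions: list[str]) -> list[tuple[str, str]]:
    """Return action pairs where neither player gains by deviating alone."""
    equilibria = []
    for a1, a2 in product(actions, repeat=2):
        u1, u2 = payoffs[(a1, a2)]
        best_for_1 = all(payoffs[(alt, a2)][0] <= u1 for alt in actions)
        best_for_2 = all(payoffs[(a1, alt)][1] <= u2 for alt in actions)
        if best_for_1 and best_for_2:
            equilibria.append((a1, a2))
    return equilibria


prisoners_dilemma: Payoffs = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}
print(pure_nash_equilibria(prisoners_dilemma, ["cooperate", "defect"]))
```

Both players would score more by cooperating, yet mutual defection is the only equilibrium. That gap between individual incentives and the group outcome is exactly what competitive and mixed multi-agent designs have to manage.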

4. Why Multi-Agent Systems Matter (More Than Ever)

So why are multi-agent systems becoming such a big topic in 2025?

Scalability

One giant model can’t always:

- Know everything

- Handle all tasks

- Scale linearly with problem complexity

Splitting work across multiple agents lets you:

- Parallelize tasks

- Specialize roles

- Swap or upgrade parts independently
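One way to picture parallel, specialized agents: each agent is an async function, and a coordinator runs independent ones concurrently with `asyncio.gather`. The three agents are hypothetical stubs that sleep to simulate slow work such as a search or model call.

```python
import asyncio
import time


async def research_agent(topic: str) -> str:
    await asyncio.sleep(0.2)  # stand-in for a slow search or model call
    return f"notes on {topic}"


async def data_agent(topic: str) -> str:
    await asyncio.sleep(0.2)
    return f"figures for {topic}"


async def compliance_agent(topic: str) -> str:
    await asyncio.sleep(0.2)
    return f"policy check for {topic}: ok"


async def coordinator(topic: str) -> list[str]:
    # Independent subtasks run concurrently instead of one after another.
    return await asyncio.gather(
        research_agent(topic), data_agent(topic), compliance_agent(topic)
    )


start = time.perf_counter()
results = asyncio.run(coordinator("solar adoption"))
elapsed = time.perf_counter() - start
# The three 0.2s waits overlap, so this should take about 0.2s, not 0.6s.
print(results, f"{elapsed:.2f}s")
```

Because each agent is a separate function, upgrading one (say, replacing `data_agent` with a better implementation) does not touch the others.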

Specialization

You can design agents that are really good at one thing:

- A “Data Retrieval Agent” for searching and filtering

- A “Code Generation Agent” for writing and refactoring code

- A “Compliance Agent” for checking outputs against rules

Specialized agents often produce better results than one generic agent trying to do everything.
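A minimal sketch of specialization in code: a registry maps each task type to the agent that handles it, and a dispatcher routes work accordingly. The three handlers mirror the roles listed above but are hypothetical stubs with toy logic.

```python
from typing import Callable

Handler = Callable[[str], str]
REGISTRY: dict[str, Handler] = {}


def agent(task_type: str) -> Callable[[Handler], Handler]:
    """Decorator that registers a specialist agent for one task type."""
    def register(fn: Handler) -> Handler:
        REGISTRY[task_type] = fn
        return fn
    return register


@agent("retrieve")
def data_retrieval_agent(query: str) -> str:
    return f"top documents for '{query}'"


@agent("code")
def code_generation_agent(spec: str) -> str:
    return f"# code implementing: {spec}"


@agent("compliance")
def compliance_agent(text: str) -> str:
    # Toy rule standing in for a real policy check.
    return "flagged" if "password" in text.lower() else "approved"


def dispatch(task_type: str, payload: str) -> str:
    if task_type not in REGISTRY:
        raise ValueError(f"no agent registered for task type {task_type!r}")
    return REGISTRY[task_type](payload)


print(dispatch("compliance", "Share the admin password"))
```

Each specialist can be tuned (different prompt, model, or tools) without affecting the others; the dispatcher only needs to know which task type goes where.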

Robustness & Flexibility

If you design your system as multiple agents:

- You can replace or update one agent without rewriting the whole system

- You can add new agents (e.g., a new tool/skill) easily

- The system can adapt when one part fails (fallback agents, backup strategies)
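The fallback idea can be sketched as a chain: try the primary agent, and if it raises an error, hand the same input to a backup. Both summarizer agents below are hypothetical stand-ins; a production system would also log the failure and might retry with backoff before falling back.

```python
from typing import Callable

AgentFn = Callable[[str], str]


def with_fallbacks(*agents: AgentFn) -> AgentFn:
    """Return an agent that tries each candidate in order until one succeeds."""
    def run(task: str) -> str:
        errors = []
        for candidate in agents:
            try:
                return candidate(task)
            except Exception as exc:  # one failing agent should not sink the system
                errors.append(f"{candidate.__name__}: {exc}")
        raise RuntimeError("all agents failed: " + "; ".join(errors))
    return run


def primary_summarizer(text: str) -> str:
    raise TimeoutError("model endpoint timed out")  # simulate an outage


def backup_summarizer(text: str) -> str:
    return text.split(".")[0] + "."  # crude fallback: keep the first sentence


summarize = with_fallbacks(primary_summarizer, backup_summarizer)
print(summarize("Agents collaborate. They also fail sometimes."))
```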

Closer to How Humans Work

Organizations, teams, markets, and societies are already multi-agent systems.

Building AI systems in a similar pattern makes it easier to:

- Integrate humans “in the loop”

- Map existing workflows into AI-enhanced versions

- Think in terms of roles, responsibilities, and handoffs

5. Real-World Examples of Multi-Agent Systems

Multi-agent ideas are everywhere once you start looking.

Robotics & Swarm Systems

- Fleets of drones mapping farmland or disaster zones

- Swarm robots cleaning warehouses or doing inventory

- Self-driving cars coordinating at intersections

Finance & Markets

- Many autonomous trading bots interacting in real time

- Auction systems (ad bidding, marketplaces)

- Pricing agents adjusting offers based on competition

Games & Simulations

- Multi-agent RL in complex games (e.g., team-based esports environments)

- Simulations of traffic, crowds, epidemics, or supply chains

- AI opponents in strategy games cooperating or competing

Modern AI Workflows

- A research agent + a summarizer + a fact-checker working together on a report

- A content pipeline: idea generator → outline creator → writer → editor → social repurposer

- Customer service: triage bot → FAQ bot → escalation bot → human agent
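The customer-service pipeline is essentially a chain of agents where each stage either resolves the request or escalates it. Here is a sketch in which keyword rules stand in for real classifiers or LLM calls; the FAQ entries and function names are made up for illustration.

```python
from typing import Optional

FAQ = {
    "reset password": "Use the 'Forgot password' link on the sign-in page.",
    "opening hours": "We are open 9:00-17:00, Monday to Friday.",
}


def triage_bot(message: str) -> str:
    # Send refund requests straight to escalation; everything else tries the FAQ first.
    return "escalate" if "refund" in message.lower() else "faq"


def faq_bot(message: str) -> Optional[str]:
    text = message.lower()
    for question, answer in FAQ.items():
        if question in text:
            return answer
    return None  # no match: pass the request along


def escalation_bot(message: str) -> str:
    return f"Ticket created for a human agent: {message!r}"


def handle(message: str) -> tuple[str, str]:
    """Return (stage that resolved the request, reply)."""
    if triage_bot(message) == "faq":
        answer = faq_bot(message)
        if answer is not None:
            return "faq_bot", answer
    return "escalation_bot", escalation_bot(message)


print(handle("How do I reset password?"))
print(handle("I want a refund for order 42"))
```

Anything the automated stages cannot resolve ends up with the escalation bot, which is the handoff point to a human agent.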

Technical Insight: Many new AI “agent frameworks” (like LangChain+LangGraph, AutoGen, OpenAI’s agent orchestration tools, etc.) are essentially multi-agent system toolkits: they help you define roles, communication channels, and workflows between agents, all powered by LLMs and tools.

6. LLM-Based Multi-Agent Systems

Large Language Models make it easier than ever to build multi-agent systems because:

- Each agent can be an LLM with a different prompt/role

- Communication between agents can simply be natural language messages

- Tools and APIs can be exposed as functions that agents can call

Example: Multi-Agent Research & Writing Team

Imagine you want to write a long, well-researched article:

- Research Agent – searches the web, extracts key points, compiles notes.

- Planner Agent – turns notes into an outline and section plan.

- Writer Agent – drafts each section following the outline and style guide.

- Reviewer Agent – checks for clarity, tone, and hallucinations; suggests edits.

All of these agents are just LLMs with:

- Different system prompts (roles)

- Access to different tools (search, database, etc.)

- A shared memory or document store to exchange info

Technical Insight: In LLM-based MAS, the “protocol” between agents is often just structured messages (e.g., JSON + natural language). A controller or graph engine decides which agent handles which stage, and passes along the conversation history + state. This keeps the system interpretable and easier to debug.
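Here is a minimal sketch of that pattern for the research-and-writing team above. It assumes a stubbed `call_llm` function in place of a real model client (no specific provider API is implied). Each agent is the same stub with a different system prompt, the controller follows a fixed graph of stages, and every message is a JSON object carrying the content plus the history so far.

```python
import json

# Fixed pipeline graph: which stage comes next (None means stop).
GRAPH = {"research": "plan", "plan": "write", "write": "review", "review": None}

SYSTEM_PROMPTS = {
    "research": "You are a Research Agent. Gather key points.",
    "plan": "You are a Planner Agent. Turn notes into an outline.",
    "write": "You are a Writer Agent. Draft sections from the outline.",
    "review": "You are a Reviewer Agent. Check clarity and unsupported claims.",
}


def call_llm(system_prompt: str, user_content: str) -> str:
    """Hypothetical stand-in for a real model client; it just echoes its role."""
    role = system_prompt.split(".")[0].removeprefix("You are a ")
    return f"[{role}] handled {len(user_content)} chars of input"


def run_pipeline(task: str) -> list[dict]:
    history: list[dict] = []  # shared log every agent can see
    stage, content = "research", task
    while stage is not None:
        message = {"to": stage, "content": content, "history": history}
        output = call_llm(SYSTEM_PROMPTS[stage], json.dumps(message))
        history.append({"from": stage, "output": output})
        stage, content = GRAPH[stage], output
    return history


for step in run_pipeline("Write an article on multi-agent systems"):
    print(json.dumps(step))
```

Because every hop is a plain JSON record, you can print or store the history and see exactly which agent produced what.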

7. Challenges in Multi-Agent Systems

MAS are powerful—but they’re not free.

Coordination & Deadlocks

- Agents can “talk in circles” or get stuck in loops.

- Without clear termination conditions, they might never decide they’re done.
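Two simple safeguards go a long way: an explicit "done" signal and a hard cap on rounds. The sketch below assumes a hypothetical writer/critic pair where the critic approves once the draft reaches five words; the point is the loop structure, not the stopping rule itself.

```python
def writer(draft: str, feedback: str) -> str:
    # Stub: extend the draft whenever the critic asks for more detail.
    return draft + " More detail." if feedback == "expand" else draft


def critic(draft: str) -> str:
    # Stub: approve once the draft has at least 5 words.
    return "DONE" if len(draft.split()) >= 5 else "expand"


def collaborate(draft: str, max_rounds: int = 5) -> tuple[str, int, str]:
    feedback = critic(draft)
    rounds = 0
    # Stop on the done signal OR when the round budget is exhausted.
    while feedback != "DONE" and rounds < max_rounds:
        draft = writer(draft, feedback)
        feedback = critic(draft)
        rounds += 1
    status = "approved" if feedback == "DONE" else "stopped: round limit"
    return draft, rounds, status


print(collaborate("Intro."))
print(collaborate("Intro.", max_rounds=1))
```

Reporting *why* the loop stopped (approval versus hitting the limit) makes it easy to spot agents that keep talking in circles.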

Credit Assignment

- When many agents are involved, it’s hard to know who helped and who hurt the outcome.

- This complicates learning and optimization (especially in RL settings).
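One well-known approach to credit assignment is the counterfactual "difference reward": credit each agent with the team score with everyone present minus the team score with that agent removed. The sketch below applies this to a toy coverage task where the team score is the number of distinct cells covered; the agents and numbers are illustrative.

```python
def team_score(contributions: dict[str, set[int]]) -> int:
    # Global objective: how many distinct cells the team covered.
    covered: set[int] = set()
    for cells in contributions.values():
        covered |= cells
    return len(covered)


def difference_rewards(contributions: dict[str, set[int]]) -> dict[str, int]:
    """Credit each agent with score(all) minus score(all without that agent)."""
    total = team_score(contributions)
    return {
        name: total - team_score({k: v for k, v in contributions.items() if k != name})
        for name in contributions
    }


coverage = {
    "scout": {1, 2, 3, 4},
    "helper": {3, 4},          # fully redundant with the scout
    "specialist": {5, 6, 7},
}
print(difference_rewards(coverage))
```

The helper gets zero credit even though it did work, because everything it covered was already covered by the scout. A single shared team reward would have hidden that.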

Communication Overhead

- Too much communication = slow, expensive, and noisy.

- Too little communication = agents make poor decisions due to missing context.

Safety & Alignment

- When agents can act autonomously (send emails, trigger actions, modify data), you must ensure:

  - They follow policies and constraints.

  - They don’t collectively create harmful behavior even if each one is “locally” safe.

Technical Insight: A common pattern is to introduce coordination agents (routers, managers, schedulers) and governor/critic agents (safety, compliance, quality). These meta-agents don’t “do the work” themselves but supervise and organize other agents, similar to managers and auditors in a human organization.
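A governor agent can be as simple as a gate between proposed actions and their execution. In this sketch, worker agents propose actions, and a hypothetical `Governor` enforces both a per-agent limit and a team-wide limit on sending emails; the limits are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Governor:
    """Meta-agent that approves or rejects actions proposed by worker agents."""
    per_agent_limit: int = 3   # each agent may send at most 3 emails
    global_limit: int = 5      # the whole team may send at most 5
    sent: dict[str, int] = field(default_factory=dict)

    def review(self, agent: str, action: str) -> bool:
        if action != "send_email":
            return True  # this sketch only governs one risky action
        mine = self.sent.get(agent, 0)
        total = sum(self.sent.values())
        if mine >= self.per_agent_limit or total >= self.global_limit:
            return False
        self.sent[agent] = mine + 1
        return True


gov = Governor()
proposals = [("sales", "send_email")] * 3 + [("support", "send_email")] * 3
decisions = [gov.review(agent, action) for agent, action in proposals]
print(decisions)
```

Each agent stays within its own limit, yet the governor still blocks the sixth email because the team as a whole hit its cap. That is the kind of collective risk a purely local check would miss.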

8. How to Start Using Multi-Agent Ideas in Your Own Projects

You don’t have to build a huge MAS from day one. Start simple:

Step 1: Split Roles Conceptually

Take an existing AI workflow and ask:

- “What are the distinct roles here?”

- “What if I separate them into different agents?”

Example: a job application helper

- Agent A: Analyze resume vs job description

- Agent B: Suggest improvements

- Agent C: Draft a tailored cover letter

Step 2: Define Clear Responsibilities

For each agent, specify:

- Role (Who are you?)

- Goal (What are you trying to do?)

- Inputs (What do you receive?)

- Outputs (What do you produce?)

- Tools (What are you allowed to use?)
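Writing these five fields down as data keeps them explicit and easy to review. Here is a minimal sketch using two agents from the job application helper; the `AgentSpec` class, the field values, and the `keyword_matcher` tool name are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentSpec:
    role: str                    # Who are you?
    goal: str                    # What are you trying to do?
    inputs: tuple[str, ...]      # What do you receive?
    outputs: tuple[str, ...]     # What do you produce?
    tools: tuple[str, ...] = ()  # What are you allowed to use?

    def system_prompt(self) -> str:
        return (
            f"You are the {self.role}. Your goal: {self.goal}. "
            f"You receive: {', '.join(self.inputs)}. "
            f"You produce: {', '.join(self.outputs)}. "
            f"Allowed tools: {', '.join(self.tools) or 'none'}."
        )


analyzer = AgentSpec(
    role="Resume Analyzer",
    goal="compare the resume against the job description",
    inputs=("resume", "job_description"),
    outputs=("gap_report",),
    tools=("keyword_matcher",),
)
cover_letter_writer = AgentSpec(
    role="Cover Letter Writer",
    goal="draft a tailored cover letter",
    inputs=("gap_report", "job_description"),
    outputs=("cover_letter",),
)
print(cover_letter_writer.system_prompt())
```

A side benefit: you can check mechanically that every agent's inputs are produced by some earlier agent (here, `gap_report` flows from the analyzer to the writer).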

Step 3: Design the Conversation / Flow

- In what order do agents run?

- Who talks to whom?

- When do you stop (termination condition)?

Even a simple three-agent pipeline can noticeably improve structure and quality compared with one giant prompt.

Step 4: Add Guardrails

- Limit what each agent can do.

- Add a final “Reviewer” or “Safety” step before anything is sent to users or external systems.

- Log interactions so you can debug and improve.
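The three guardrails above can be combined in a thin wrapper. This sketch assumes hypothetical tool functions and a toy keyword-based reviewer; it uses Python's standard `logging` module to record every tool call and every blocked attempt.

```python
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("mas")

TOOLS = {
    "search": lambda q: f"results for {q}",
    "send_email": lambda body: f"sent: {body}",
}
ALLOWED = {"researcher": {"search"}, "writer": {"search", "send_email"}}


def use_tool(agent: str, tool: str, arg: str) -> str:
    # Guardrail 1: each agent may only call the tools it was granted.
    if tool not in ALLOWED.get(agent, set()):
        log.warning("blocked %s -> %s", agent, tool)
        raise PermissionError(f"{agent} may not use {tool}")
    log.info("%s -> %s(%r)", agent, tool, arg)  # Guardrail 3: log every call
    return TOOLS[tool](arg)


def reviewer_approves(text: str) -> bool:
    # Guardrail 2: a final check before anything leaves the system (toy rule).
    return "confidential" not in text.lower()


draft = "Quarterly update: all projects on track."
if reviewer_approves(draft):
    print(use_tool("writer", "send_email", draft))
```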

Conclusion

Multi-Agent Systems are not just an academic concept—they’re quickly becoming the default way to think about complex AI applications.

By combining multiple agents that:

- Have clear roles

- Communicate and coordinate

- Use tools and memory

- Operate under guardrails

…you can build systems that are more scalable, more robust, and closer to how real teams and organizations work.

As AI continues to evolve, understanding how to design, coordinate, and govern multi-agent systems will be a key skill for anyone building serious AI products and workflows.

Stay tuned to BotCampusAI for more guides on multi-agent architectures, practical agent workflows, and step-by-step examples you can plug directly into your own projects.